Code commentary

Aug. 12th, 2025 01:12 pm

I rarely write about my work here. But today I think I will!

I've worked on many codebases, with very large numbers of contributors in some cases, and only a few in others. Generally when you make a contribution to a large codebase you need to learn the etiquette and the standards established by the people who came before you, and stick to those.

Not making waves - at least at first - is important, because along with whatever code improvements you may contribute when you join a project, you also bring a certain amount of friction along with you that the other developers must spend energy countering. Even if your code is great you may drag the project down overall by frustrating your fellow contributors. So act like a tourist at first:

- Be very polite, and keen to learn.

- Don't get too attached to the specific shape of your contribution because it may get refactored, deferred, or even debated out of existence.

- It won't always be like this, but no matter what kind of big-shot you are on other projects, it may be like this at first for this new one.

Let me put it generally: Among supposedly anti-social computer geeks, personality matters. There's a reason many folks in my industry are fascinated by epic fantasy worlds always on the brink of war: They are actually very sensitive to matters of honor and respect.

Anyway, this is a post about code commentary.

In one codebase I contributed to, I encountered this philosophy about code comments from the lead developers: "A comment is an apology."

The idea behind it is, comments are only needed when the code you write isn't self-explanatory, so whenever you feel the need to write a comment, you should refactor your code instead.

I believe this makes two wrong assumptions:

- The only purpose of a comment is to compensate for some negative aspect of the code.

- Code that's easy for you to read is easy for everyone to read.

The first assumption contradicts reality and history. Code comments are obviously used for all kinds of things, and have been since the beginning of compiled languages. You live in a world teeming with other developers using them for these purposes. By ruling some of them out you are expressing a preference, not some grand truth.

Comments are used to:

- Briefly summarize the operation of the current code, or the reasoning used to arrive at it.

- Point out important deviations from a standard structure or practice.

- Explain why an alternate, simpler-seeming implementation does not work, and link to the external factor preventing it.

- Provide input for auto-generated documentation.

- Leave contact information or a link to an external discussion of the code.

- Make amusing puns just to brighten another coder's day.

All of these - and more - are valid, and when you receive code contributed by other people you should take a light approach to policing which categories are allowed.
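As a rough sketch of a few of these categories living together in one small function (Python here; the name `unique_tags` and the claim about how callers display tags are made up for illustration, not taken from any real codebase):

```python
def unique_tags(tags):
    """Return the tags with duplicates removed, keeping first-seen order.

    A summary like this states intent, and a docstring in this position
    can also be picked up by documentation generators.
    """
    # Why not just set(tags)? It would be shorter, but a set does not
    # preserve order, and (by assumption in this sketch) callers show
    # tags in the order the author typed them.
    # dict keys keep insertion order, so this drops repeats without
    # shuffling anything.
    return list(dict.fromkeys(tags))


print(unique_tags(["b", "a", "b", "c", "a"]))
```

None of these comments apologizes for the code. The function is short and arguably clear already; the comments just save the next reader from "simplifying" it into a bug.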

The second assumption is generally based in ego.

I've been writing software for over 40 years, and I haven't abandoned code comments or even reduced the volume of the ones I generate, but what I have definitely done is evolve the content of them significantly.

I've developed an instinct over time for what the next person - not me - may have slightly more trouble unraveling. That includes non-standard library choices, complex logic operations that need to be closely read to be fully understood, architectural notes to help a developer learn what influences what in the codebase, and brief summaries at the tops of classes and functions to explain intent, for a developer to keep in mind when they read the code beneath. Because hey, maybe my intent doesn't match my code and there's a bug in there, hmmm?

The reason I do this is humility. I understand that even after 40 years, I am not a master of all domains. The code I write and the choices I make may be crystal clear to me, but not to others. Especially new contributors: People coming into my codebase from outside. Especially people with less experience in the realm I'm currently working in. For the survival of a project, it's better to know when newcomers need an assist and provide it, than to high-handedly assume that if they don't understand the code instinctively, they must be unworthy developers who should be discouraged from contributing, as if explaining what's going on were "dumbing down" your code.

Along the same lines, it's silly to believe that your own time is so very valuable that writing comments in code is an overall reduction in your productivity.

You may object, "but what if the comments fall out of sync with the code itself, and other developers are actually led astray?"

I have two responses to this, and you may not like either one: First, if your comments are out of sync with the implementation it's either because your comments are attempting to explain how it works and the implementation has drifted, or your comments are explaining the intent behind the code, and the behavior no longer matches the intent. In the first case, the comment may potentially cause a developer to introduce a bug if they're not actually understanding the code. But if they're reading the code and they can't understand it because it's complex, then the commentary was justified, and it should be repaired rather than removed. (Or, you should refactor the code so you don't need to explain "how" so much.) In the second case, someone has already introduced a bug, and the comment is a means to identify the fix.
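Here is a small sketch of that second case, in Python. Both functions are hypothetical, and the second one is deliberately wrong: it stands in for an implementation that has drifted away from the intent its comment still states.

```python
def is_leap_year(year):
    # Intent: Gregorian rule. Every 4th year is a leap year, except
    # century years, which must also be divisible by 400.
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


def is_leap_year_drifted(year):
    # Intent: Gregorian rule. Every 4th year is a leap year, except
    # century years, which must also be divisible by 400.
    # (Deliberately buggy for this sketch: the century rule is missing.)
    return year % 4 == 0


# The comment and the code disagree about 1900. The comment did not
# cause that bug; it is the clue that shows what the fix should be.
print(is_leap_year(1900), is_leap_year_drifted(1900))
```

Without the intent comment, a reader of the drifted version has no way to tell whether ignoring century years was a mistake or a decision.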

And second, if it feels like a lot of trouble to maintain your comments, then perhaps you write great code but you're not very good at explaining it in clear language to other humans. You should work on that.

If it's your project, you can make the rules, and if it's your code, then obviously it's clear to you. But if you want to work on a team, and have that team survive - and especially if you want to form a team around your own project - then you need a broader philosophy.

By the way, I should note that there are less severe incarnations of "a comment is an apology" out there. For example, "a comment is an invitation for refactoring". That's a handy idea to consider, though it still runs afoul of the reductionist attitude about the purpose of comments.

You should indeed always consider why you think a comment is necessary, because it might lead to an alternate course of action. Even if that action has no effect in the codebase itself, like filing a ticket calling for a future refactor once an important feature gets shipped, it may be a better move. But this is an exercise in flexibility, and in considering what you might have missed, rather than a mandate that code be self-explanatory enough to be comment-free (and an assumption that you personally are the best judge of that).

Here are my own guidelines for writing comments. They're a bit loose, and they stick to the basics.

- Comments explain why, not how.

- Unless the how is particularly complicated. Then they explain how, but not what.

- Unless the what is obscure relative to the standard practice, in which case a comment explaining what might be useful.

- You learn these priorities as you go, and as you learn about a given realm of software development.
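To make the first three guidelines concrete, here is one loose sketch of each in Python. The names, the `MAX_BATCH` value, and the "downstream service" it mentions are all assumptions invented for the example.

```python
MAX_BATCH = 500  # hypothetical limit, see the "why" comment below


def chunk(items, size=MAX_BATCH):
    # WHY: (assumed for this sketch) the downstream service rejects
    # requests with more than 500 items, so large uploads are split.
    items = list(items)
    return [items[i:i + size] for i in range(0, len(items), size)]


def is_power_of_two(n):
    # HOW: a power of two has exactly one bit set. Subtracting 1 clears
    # that bit and sets every bit below it, so the AND comes out zero
    # only when there was a single bit to begin with.
    return n > 0 and (n & (n - 1)) == 0


def first_negative(numbers):
    for n in numbers:
        if n < 0:
            break
    else:
        # WHAT: the "else" on a for loop runs only when the loop ended
        # without hitting "break", i.e. no negative number was found.
        return None
    return n


print(chunk(range(5), 2), is_power_of_two(8), first_negative([3, -1, 2]))
```

The "what" comment would be noise on an ordinary `if`/`else`. It earns its place here only because `for`/`else` is rare enough that plenty of readers will misread it.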

Always be thinking about the next person coming in after you, looking around and trying to understand what you've done. And, try to embrace the notes they're compelled to contribute as well.

[00:54:47.97] "I'm in a little bit of trouble. Oh yeah." (gibberish) (slurping) "Why isn't he dying? What is he eating in my ear?"

[00:54:47.97] "I'm in a little bit of trouble. Oh yeah." (gibberish) (slurping) "Why isn't he dying? What is he eating in my ear?" Search engines used to take in a question and then direct the user to some external data source most relevant to the answer.

Search engines used to take in a question and then direct the user to some external data source most relevant to the answer. People learning about evolution sometimes ask, "Why aren't animals immortal?" The answer is, the world keeps changing, and life needs to create new bodies to deal with it. What we really want when we ask for immortality is one constantly renewing body, running all the amazing interconnected systems that we're used to, and that convince us we are alive from one day to the next, without interruption. ... Well, except for sleep, which is a weird exception we have decided to embrace, since going without sleep really sucks.

People learning about evolution sometimes ask, "Why aren't animals immortal?" The answer is, the world keeps changing, and life needs to create new bodies to deal with it. What we really want when we ask for immortality is one constantly renewing body, running all the amazing interconnected systems that we're used to, and that convince us we are alive from one day to the next, without interruption. ... Well, except for sleep, which is a weird exception we have decided to embrace, since going without sleep really sucks. As a sort of last hurrah before my work schedule gets intense again, I played my way through

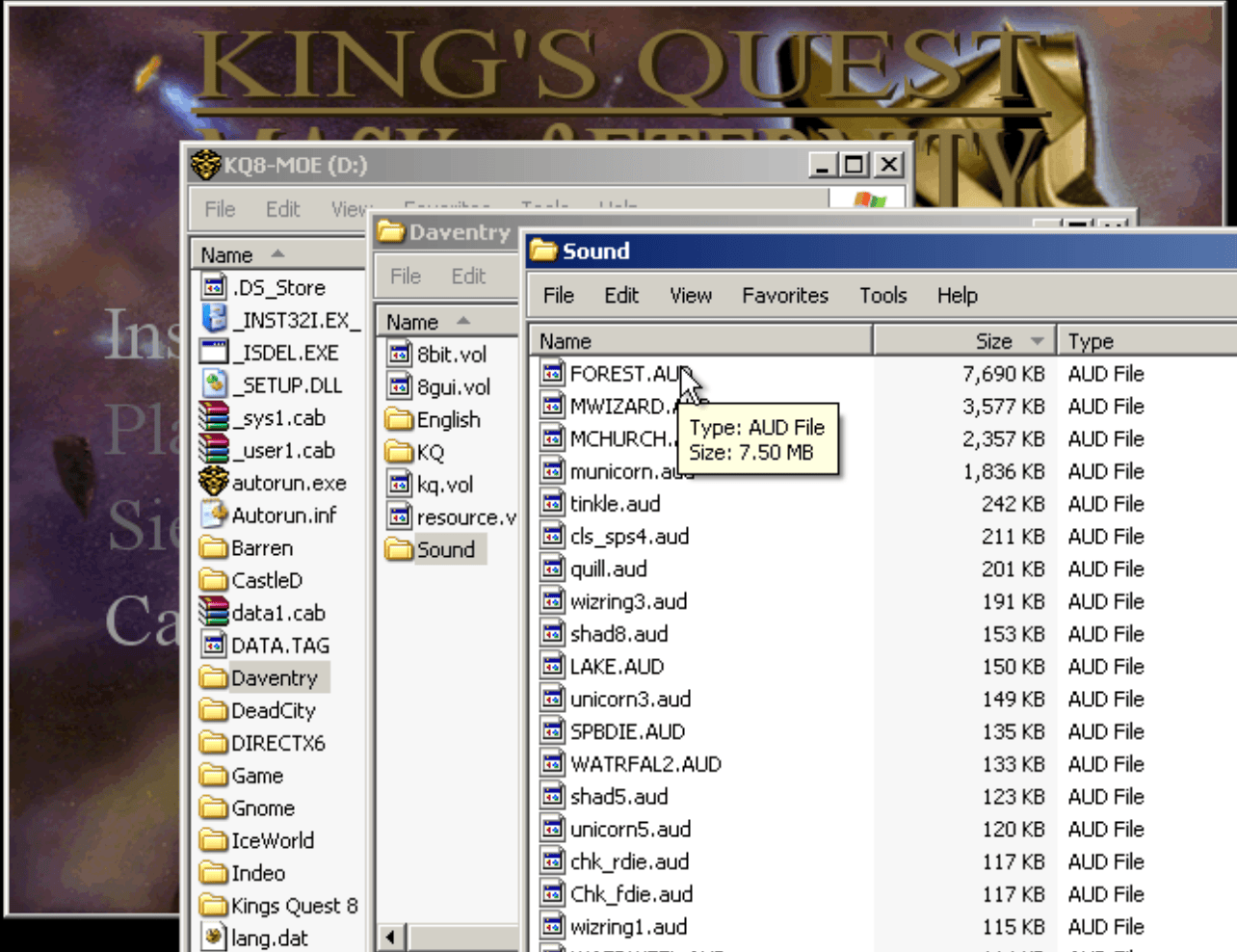

As a sort of last hurrah before my work schedule gets intense again, I played my way through

Then I realized, the only way to frame an answer is by considering the act of programming within the act of communicating via language, which is something humans have been doing in very complex forms since there were humans, and in less complex forms before that.

Then I realized, the only way to frame an answer is by considering the act of programming within the act of communicating via language, which is something humans have been doing in very complex forms since there were humans, and in less complex forms before that. This is not "intelligence". Whatever intelligence is active in this system is the intelligence that a human is bringing to the table as they manipulate a prompt in a generative tool to get some result, or change the way it classifies subsequent information.

This is not "intelligence". Whatever intelligence is active in this system is the intelligence that a human is bringing to the table as they manipulate a prompt in a generative tool to get some result, or change the way it classifies subsequent information.

For quite a while I've been looking for some nice way to get a complete backup of my Dreamwidth content onto my local machine. And I gotta wonder... Is this not a very popular thing? There are a lot of users on here, posting a lot of cool and unique content. Wouldn't they want to have a copy, just in case something goes terribly wrong?

For quite a while I've been looking for some nice way to get a complete backup of my Dreamwidth content onto my local machine. And I gotta wonder... Is this not a very popular thing? There are a lot of users on here, posting a lot of cool and unique content. Wouldn't they want to have a copy, just in case something goes terribly wrong?

So. Arrrr! Hello me swarthy crew, and welcome to the rant. Before we get fully under sail, I need to pause at the edge of the harbor and ask all hands a question:

So. Arrrr! Hello me swarthy crew, and welcome to the rant. Before we get fully under sail, I need to pause at the edge of the harbor and ask all hands a question: Meanwhile, software developers are charging you a monthly fee just to have their software installed on that device. Regardless of whether you even use it. And they are getting away with this. You can call that "what the market will bear", yes. Go ahead. But I call that a bloated piece-of-garbage market that is already too cozy for software makers.

Meanwhile, software developers are charging you a monthly fee just to have their software installed on that device. Regardless of whether you even use it. And they are getting away with this. You can call that "what the market will bear", yes. Go ahead. But I call that a bloated piece-of-garbage market that is already too cozy for software makers.